- People realize that they don’t actually know how to test properly. They know the buzzwords and catchphrases but, when it comes time to execute, they’re lost.

- Testing is a procrastinator’s dream come true. You can put off testing your marketing collateral for years while your business continues to run as expected. It’s the ultimate “I’ll do this later” fallacy.

Unfortunately, there are two sides to the “ignorance is bliss” coin.

On one side, you have the peace of mind that comes from believing what you don’t know can’t hurt you. You’re not actively testing your marketing, yet your business is running smoothly. On the other side, a nagging thought slowly eats away at you: What if business could be better? What if you could improve a landing page’s conversion rate from 1% to just 2%? That would double your results (opt-ins and sales) from that one page. That’s a huge opportunity for your business.

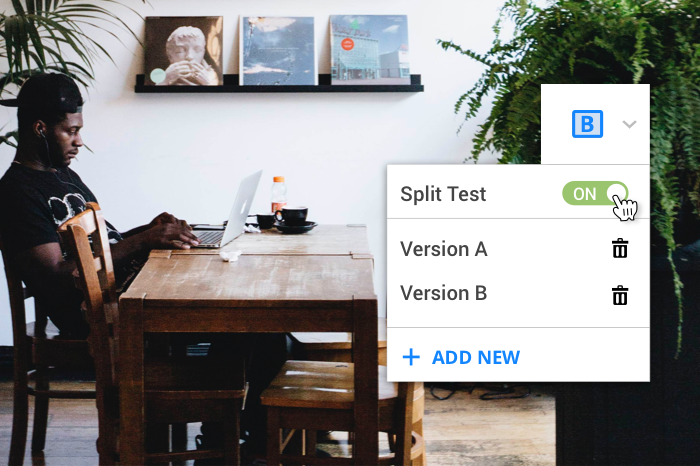

There’s a solution to this problem that doesn’t take a lot of time, energy or expertise. It’s called split testing.

Essentially, split testing takes your page traffic and automatically divides it evenly across multiple versions of one page so you can measure their performance side by side. There are two primary ways to split test your landing pages: A/B testing and multivariate testing. They have different benefits and purposes, but both give you valuable insight into how your pages perform, so you can improve the final page, and your conversions, based on how real visitors behave.
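Testing tools handle the split for you, but the mechanics are simple enough to sketch. The Python below is a minimal illustration, not any particular tool’s method. It assumes each visitor carries a stable ID (for example, from a cookie) and hashes that ID, so the same visitor always sees the same version while overall traffic divides roughly evenly. The function name `assign_variant` and the version labels are hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, variants: list[str]) -> str:
    """Deterministically map a visitor to one page version (hypothetical helper).

    Hashing the ID, rather than picking at random on every visit, keeps a
    returning visitor on the same version, while the hash spreads many
    visitors roughly evenly across all versions.
    """
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    from collections import Counter

    versions = ["original", "variation"]
    counts = Counter(assign_variant(f"visitor-{i}", versions) for i in range(10_000))
    print(counts)  # roughly 5,000 visitors per version
```

Hashing instead of random assignment is a design choice: it needs no stored lookup table, yet a visitor who comes back tomorrow still lands on the version they saw today.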

A/B testing

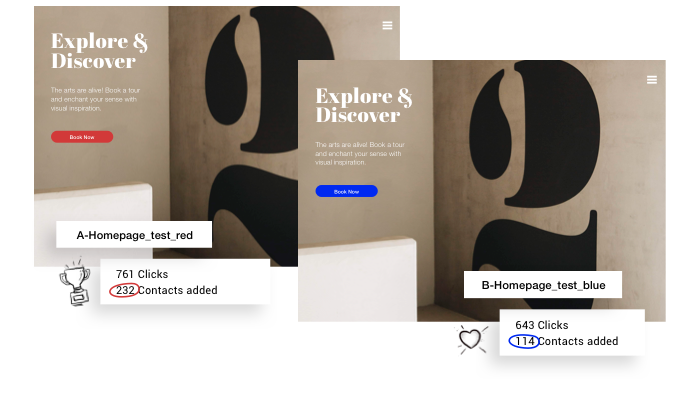

With A/B testing, you test just one element on one page at one time. For instance, you could test page performance based on the color of a button: red or blue.

The benefits of A/B testing

If you currently don’t do any testing, then A/B testing is a great way to start because the setup is simpler. It’s far less confusing to test one thing at a time, and results are black and white because you only have two data sets to compare.

Plus, if your page doesn’t get a lot of traffic, A/B testing lets each version collect visits faster, since your traffic is divided between only two versions.

Many marketers run A/B/C/D tests, which are essentially A/B tests with more variations. Each variation you add slows down how quickly you can make an informed decision from your results, because it takes longer for each variation to collect enough visitors.
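The slowdown is simple division: the same traffic is shared among every version, so each version you add stretches the time each one needs to collect enough visitors. The sketch below is illustrative only; the 2,000 visitors per version and 500 daily visitors are hypothetical figures, and `days_to_finish` is a made-up helper name.

```python
import math


def days_to_finish(visitors_needed_per_version: int, num_versions: int,
                   daily_visitors: int) -> int:
    """Days until every version has collected the required visitors,
    assuming traffic is split evenly across all versions."""
    total_needed = visitors_needed_per_version * num_versions
    return math.ceil(total_needed / daily_visitors)


# Hypothetical figures, for illustration only.
for versions in (2, 3, 4):
    print(f"{versions} versions: {days_to_finish(2_000, versions, 500)} days")
```

With these assumed numbers, two versions finish in 8 days, three in 12 and four in 16.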

Multivariate testing

With multivariate testing, you can test more than one element at a time, including all possible combinations of those elements. If you wanted to test two different images and two different headlines, you would end up with four versions of the page, one for each combination of image and headline.
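Counting the versions is a matter of multiplying the options for each element. The sketch below lists every combination with Python’s `itertools.product`; the element names and options are hypothetical placeholders.

```python
from itertools import product

# Hypothetical page elements and the alternatives being tested for each.
elements = {
    "image": ["photo_a", "photo_b"],
    "headline": ["headline_1", "headline_2"],
}

# Every version a full multivariate test must cover: one option per element.
versions = [dict(zip(elements, combo)) for combo in product(*elements.values())]

for version in versions:
    print(version)
print(len(versions), "versions")  # 2 x 2 = 4
```

Adding a third element with three options would raise the count to 2 × 2 × 3 = 12 versions, which is why multivariate tests need more traffic than A/B tests.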

The more variations you have, the better your chance of finding something that works. Think of it like baseball: the more swings you take, the better your chance of hitting a home run.

The benefits of multivariate testing

A/B testing can limit your ability to find the combination of page elements that works best. Let’s say you ran a test with a red vs. blue submit button and found that the blue button worked best. Now you want to test different form field labels, so you try two versions, both with the winning blue button.

What if the new form field labels worked best with the red button? You’ll never know unless you use multivariate testing. Also, if your page gets a decent amount of traffic, testing several elements at once can help you pinpoint which of them are responsible for poor page performance.
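To see how one-at-a-time testing can miss the best combination, the sketch below uses made-up conversion rates (not real data) in which the new form field labels perform best with the red button. A greedy sequence of A/B tests, like the red vs. blue example above, locks in blue first and never tries red with the new labels; comparing every combination finds that pair. The rates and function names are hypothetical, and the code treats the rates as known exactly, whereas a real test only estimates them from traffic.

```python
from itertools import product

# Made-up "true" conversion rates for each combination, for illustration only.
RATES = {
    ("blue", "old_labels"): 0.030,
    ("red", "old_labels"): 0.025,
    ("blue", "new_labels"): 0.032,
    ("red", "new_labels"): 0.040,
}
BUTTONS = ["red", "blue"]
LABELS = ["old_labels", "new_labels"]


def sequential_ab() -> tuple[str, str]:
    """Test the button with the old labels, keep the winner, then test labels."""
    best_button = max(BUTTONS, key=lambda button: RATES[(button, "old_labels")])
    best_labels = max(LABELS, key=lambda labels: RATES[(best_button, labels)])
    return best_button, best_labels


def full_multivariate() -> tuple[str, str]:
    """Compare every button and label combination at once."""
    return max(product(BUTTONS, LABELS), key=lambda combo: RATES[combo])


print("Sequential A/B picks:", sequential_ab())      # ('blue', 'new_labels')
print("Multivariate picks:", full_multivariate())    # ('red', 'new_labels')
```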

Fair testing

Understanding the difference between testing styles is only part of the battle; you also need to set the stage for a test that produces usable results. That means running a fair test, which requires you to:

- Test only one element at a time OR every combination of elements.

- Run all versions at the exact same time.

- Wait until your page gets enough traffic to reach statistical confidence.

Marketing is an imperfect practice. As much as we try to turn it into an exact science, there will always be multiple variables in play. We’re dealing with people, people are affected by emotion, and emotion is unpredictable. That’s why you need a control group, and everything except the element you’re testing must stay equal for the test to be valid and fair. Let’s go over these guidelines:

Test only one element at a time OR every combination of elements.

With an A/B test, you should change only one variable at a time so you can confidently say which change caused the better or worse results. With a multivariate test, you need to test every possible combination of the elements you’re changing if you want useful data.

Run all versions at the exact same time.

Some people make the mistake of turning off their original page, introducing a new page, and then comparing historical data with current data. This isn’t a valid comparison because the time of month, week or season could affect the results. You’d have no way of knowing whether the results were caused by timing or by the elements you were testing.

For example, let’s say in January you directed all traffic to the landing page with the red button. In July, you think you’ve got a good amount of data, so you pause the campaign directing traffic to the red button and send everyone to the blue button landing page instead. At the end of the year, you compare the two results. Why does the blue button have so many clicks? It’s probably not just because of the color.

You’re not comparing data from the same period, so you can’t conclude that the blue button was the be-all and end-all of your landing page optimization effort. Maybe your buyers are more active in the summer. Perhaps your marketing efforts increased toward the end of the year. Using mismatched data to draw conclusions is like comparing apples to oranges.

Timing is a factor that should remain steady in order to accurately review your data. If you’re going to show a red and blue button, make sure you show them at the same time.

Wait until your page gets enough traffic to reach statistical confidence.

What if you only got 50 views on each version of this test? The chances that your results are a fluke are much higher than if you had gotten 10,000 visits to each, right?

This is the idea behind statistical confidence, or statistical significance: if we were to repeat our test, how confident could we be that we’d get the same result? If version A of our test got 10 visits and one conversion (a 10% conversion rate) and version B got 11 visits and two conversions (an 18.18% conversion rate), how sure can we really be that B will keep outperforming A once we turn off version A?

If you’re curious, the answer is that we can be about 70.72% sure, which isn’t good enough; a common threshold before declaring a winner is 95%.
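The 70.72% figure appears consistent with a one-sided two-proportion z-test that estimates the standard error from each version’s own conversion rate. That is an assumption about how the number was produced, not a method the example states. The sketch below reproduces the figure using Python’s standard-library `statistics.NormalDist`; `confidence_b_beats_a` is a hypothetical name, and with samples this small the normal approximation is rough.

```python
from math import sqrt
from statistics import NormalDist


def confidence_b_beats_a(visits_a: int, conv_a: int,
                         visits_b: int, conv_b: int) -> float:
    """Return 1 minus the one-sided p-value for "B converts better than A".

    Uses a normal approximation (z-test) with an unpooled standard error,
    built from each version's own conversion rate.
    """
    rate_a = conv_a / visits_a
    rate_b = conv_b / visits_b
    std_err = sqrt(rate_a * (1 - rate_a) / visits_a
                   + rate_b * (1 - rate_b) / visits_b)
    z = (rate_b - rate_a) / std_err
    return NormalDist().cdf(z)


# Version A: 10 visits, 1 conversion. Version B: 11 visits, 2 conversions.
print(f"{confidence_b_beats_a(10, 1, 11, 2):.2%}")  # about 70.72%
```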

There are many free statistical significance calculators that can help.
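To decide in advance how much traffic is “enough,” one common approach is a sample-size estimate for comparing two conversion rates. The sketch below uses the standard normal-approximation formula with assumed defaults of 95% confidence (two-sided) and 80% power; those settings and the name `visitors_per_version` are illustrative choices, not rules. It applies the formula to the 1% to 2% improvement from the start of this article.

```python
import math
from statistics import NormalDist


def visitors_per_version(baseline_rate: float, expected_rate: float,
                         confidence: float = 0.95, power: float = 0.80) -> int:
    """Approximate visitors each version needs to detect the given change.

    Normal-approximation formula for comparing two proportions (two-sided).
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Detecting a lift from a 1% to a 2% conversion rate.
print(visitors_per_version(0.01, 0.02))  # roughly 2,300 visitors per version
```

Smaller expected improvements need far more traffic, because the required sample grows with the inverse square of the difference you want to detect.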

What to test first

The natural next question is: What do you test? The short answer is everything, though not all at once, because that wouldn’t be a fair test. Since “everything” isn’t a helpful answer, here are some great elements to start testing on your pages:

- Messaging: Positioning your product one way and one way only is a mistake. You never know which pain point will resonate the most or make the light bulb go on in the minds of your leads.

- Images: Different styles or types of photography and graphics might resonate differently with your target audience.

- Colors: Big red sales buttons have a reputation for working, but do they work for YOUR audience? There’s no way to know unless you test.

- Layout: Positioning your page elements correctly is a big deal. If your page requires any scrolling, you’ll want to be very particular about what you put at the top of your page in case people don’t scroll.

- Number of items: Too much or too little of something can make a big difference, so figure out the sweet spot. How many CTA buttons can you add to your page to encourage visitors to take action without annoying them? What about the number of mandatory fields on your forms?

- Offers: Find out which offer speaks to your audience. Consider different products, pricing, or value-adds (bonus products or updates). Does the 2-for-1 deal catch their eye, or does “25% off” do the trick?

- Time: Maybe sending your message at 3 A.M. isn’t the best idea. Time plays a big role in how your message will be received. Experiment with sending your messages at different times based on your audience’s likely schedule.

You can test any element on your landing page, but focus on the ones you think will make a difference based on your audience and other marketing results.

The “Champion Vs. Challenger” split testing method

Now, how do you actually perform a split test? One way to approach it is the “champion vs. challenger” method. It not only makes split testing feel like a game, but also gives you valuable insight into your audience. Here’s how to do it:

Step 1

For your first test, run an A/B or multivariate test with no more than four versions in total. Choose to test something that can have a big impact, such as the headline or the location of the form on the page.

Step 2

Run your test until you have statistical confidence that one of the versions is the winner. That winner is now your champion.

Step 3

Introduce a new challenger version by changing one more element on the page, then run another test until you have statistical confidence.

Step 4

Whichever version wins is the new champion. If you want, you can increase the proportion of the traffic you’re sending to this version since you now have more confidence that it is a high performer.

Step 5

Repeat as many times as possible before the end of the campaign.
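One round of the champion vs. challenger loop (Steps 2 through 4) can be sketched as a small decision function. This is a hypothetical illustration, not a prescribed implementation: it assumes a one-sided z-test for “confidence,” an assumed 95% threshold, and a made-up rule that sends 70% of traffic to the champion once it has won. Names such as `pick_champion` and `traffic_weights`, and the visit and conversion counts, are invented for the example.

```python
from dataclasses import dataclass
from math import sqrt
from statistics import NormalDist
from typing import Optional


@dataclass
class Version:
    name: str
    visits: int
    conversions: int

    @property
    def rate(self) -> float:
        return self.conversions / self.visits


def confidence_better(a: Version, b: Version) -> float:
    """1 minus the one-sided p-value that b converts better than a (z-test)."""
    std_err = sqrt(a.rate * (1 - a.rate) / a.visits
                   + b.rate * (1 - b.rate) / b.visits)
    if std_err == 0:
        # Both rates are 0% or 100%; fall back to a direct comparison.
        return 1.0 if b.rate > a.rate else 0.0
    return NormalDist().cdf((b.rate - a.rate) / std_err)


def pick_champion(champion: Version, challenger: Version,
                  threshold: float = 0.95) -> Optional[Version]:
    """Return the winner once one version leads with enough confidence,
    or None if the test should keep running."""
    if confidence_better(champion, challenger) >= threshold:
        return challenger
    if confidence_better(challenger, champion) >= threshold:
        return champion
    return None


def traffic_weights(champion: Version, challenger: Version,
                    champion_share: float = 0.70) -> dict[str, float]:
    """Step 4 (optional): send an assumed larger share of traffic to the champion."""
    return {champion.name: champion_share, challenger.name: 1 - champion_share}


if __name__ == "__main__":
    champ = Version("headline_1", visits=2_000, conversions=40)
    chall = Version("headline_2", visits=2_000, conversions=70)
    winner = pick_champion(champ, chall)
    if winner is None:
        print("Keep the test running.")
    else:
        loser = chall if winner is champ else champ
        print("New champion:", winner.name)
        print("Traffic split:", traffic_weights(winner, loser))
```

Returning `None` for an inconclusive round keeps Step 2’s rule explicit: no version becomes champion until the data clears the threshold.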

As you continue introducing new versions, you need to have some idea of why the winner won in order to iterate and improve. Try to see your landing page through the eyes of one of your leads or customers: What was more appealing about the version that won? Maybe moving your CTA higher on the page made it easier to see, so more visitors responded to it. Maybe one headline was more effective than another because it focused on a pain point that is more significant for the audience.

Even if you are wrong, you need a theory in order to run more tests. You will use this hypothesis to shape your next test. If the outcome contradicts your hypothesis, come up with a new one. If the outcome supports it, try to build on it.

Think like a psychologist and form theories about why people behave the way they do. If you have a fitness company, maybe your audience doesn’t quite identify with images of 0% body fat bodybuilders. Instead, try using images of models who actually look like your audience, and see how that works. Map out your audience’s needs and pain points, then work backwards to develop your theories.

Lastly, get used to being proven wrong — and start enjoying it! One of the best things about testing is just how much you will learn about your audience. Everyone has assumptions, and sometimes proving them wrong can turn out to be highly valuable to your business.